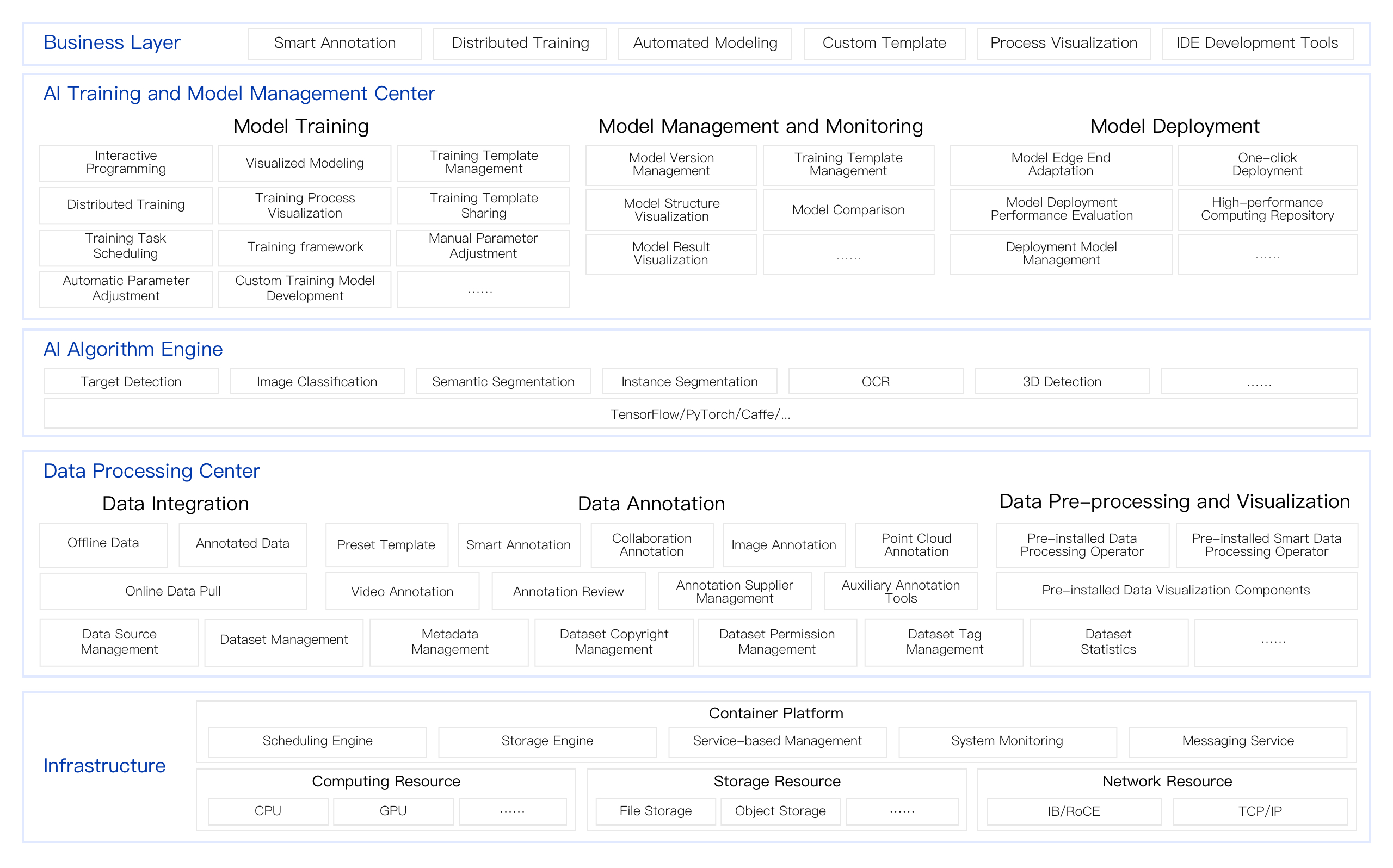

SenseTime's AI R&D solutions aim at the business pain points of the great majority of AI R&D personnel, provide full-stack AI R&D services from underlying computing power resources to platform tool applications, and realize consulting empowerment based on SenseTime's rich practical experience in the industry to accelerate the digital transformation of enterprises.

The AI R&D process involves tedious tasks such as data processing, operator development, model optimization, and edge-end adaptation, and also faces a large and continuous consumption of computing power. How to help millions of AI practitioners in China to obtain flexible and elastic computing resources, accelerate the efficiency of algorithm iteration, improve the output of training and inference models, and accumulate data, models, and codes is the common goal that SenseTime and even many AI companies continue to focus on.

Relying on the industry-leading practical experience, SenseTime's platform provides efficient algorithms and high-precision models for AI R&D personnel to meet the rapidly growing massive business needs.

Provide a low-code platform to facilitate rapid application development and help developers realize the rapid implementation of industry scenarios.

Provide a complete multi-tenant system and data privacy protection, and build a comprehensive AI R&D data security system from the I layer to the S layer.

For the rapidly growing business computing power demand, provide flexibleGPU and CPU computing power services of various specifications.

For the network requirements in the model development process, provide high-performance network services with a transmission rate of up to 200Gb per second.

For the storage requirements in the model training process, provide storage services that are optimized for the model development process.

For full-link data management, provide rich dataset management functions, automatic annotation tools, and intelligent data labels.

For large-scale distributed training, provide a self-developed deep learning training framework to meet the needs of high-performance distributed parallel training.

For the deployment of heterogeneous back-end devices, provide a unified deployment framework with heterogeneous chips to simplify the deployment process.

For the visual monitoring of the development process, provide full-link visual development support for model training, deployment, and evaluation.

The platform supports the parallel processing of large-scale data annotation tasks involving more than 1,000 people, meeting the needs of data management and sharing across departments and teams.

The platform has a built-in world-leading open source algorithm library, which supports the development of algorithms in multiple fields such as autonomous driving, smart city, and intelligent manufacturing.

The platform provides industry-leading distributed training support, which has heterogeneous computing power such as Nvidia and localization, provides flexible large-scale computing power scheduling strategies, and is deeply optimized for communication and computing of distributed tasks.

The platform provides a deployment framework that adapts to various devices such as ARM, X86, Nvidia, Huawei, and Cambricon, and generates a deployable SDK with one click to solve the “persistent problem” of deployment for developers.

Include mainstream and localizedGPUs, CPUs, and other computing power resources.

Include data services, training services, inference services, and other PaaS services required for AI R&D.

Include multi-faceted security services such as host machines, network layers, container layers, application layers, and data asset layers.

专业的AI解决方案、先进的AI产品助力您的业务实现新的突破